Since the release of Microsoft's Active Directory (AD), security management pertaining to users, applications, and systems has been simplified for tens of thousands of organizations. Of the Fortune 1000 companies, 90% use Active Directory as the foundation for cybersecurity and privileged access management (PAM). Although AD is self-reliant when it comes to access classification and identity and resource management, the many risks associated with it have evolved over the decades. Consequently, AD has become a prime target for threat actors launching identity-centric attacks. According to PurpleSec, data breach costs rose from $3.86 million to $4.24 million in 2021, the highest average total cost in the 17-year history of the report.

Moreover, decision makers need information about how urgent each organizational risk is, including the mitigation effort and cost involved, in order to prioritize the risks that matter. This need led to the industry-wide adoption of risk assessment for identities.

Identity risk assessment is a systematic approach to evaluating an organization's AD. It involves identifying, prioritizing, scoring, evaluating, and ultimately remediating security risks posed by asset, security, and policy misconfigurations, or by other vulnerabilities in the AD environment that attackers could exploit. The overarching goal of identity risk assessment is to reduce identity-related risks and thereby increase overall AD security.

An organization's AD is at its best when it's new. AD security efficiency is inversely proportional to the number of users, applications, resources, operating systems, and other components; in other words, the more identities, applications, and resources an AD holds, the less efficient it is. While this notion has a high degree of truth to it, it conflicts with the primary purpose of AD, which is to streamline access, user, resource, and security management. There are two ways to resolve this conflict: either ensure AD security best practices are strictly followed and consistently updated, which can be challenging, or introduce and enforce identity risk assessment to address the complex security landscape.

But the best way would be to fuse AD security best practices and identity risk assessment. Via this fusion, organizations can discover intricacies between them to tailor their approach towards security and risk management.

Risk assessment has implications that go beyond the obvious. It's a measure of an organization's AD health and security and, as the saying attributed to management thinker Peter Drucker goes, "you can't manage what you can't measure." In this context, the quote implies that it's exceptionally difficult to track and assess AD components if they are tangled together chaotically.

Risk can manifest in various forms. Depending on the conclusions an organization wants to draw, it's possible to represent risks and their severity both numerically and non-numerically. There are several types of risk assessment methodologies that evaluate the risk posture of an organization, but it's essential to note that each type comes with tradeoffs. Some of the most prevalent risk assessment methodologies include:

Quantitative risk assessment is a numerical evaluation of risks. In other words, numerical values are assigned to organizational assets that pose risks in order to calculate the likelihood and impact probabilities if the threat materializes. Quantitative risk assessment is an analytical process that demands a high level of meticulousness and exactitude, making it more scientifically complex than other types of methodologies. Results of quantitative risk assessment can be translated into financial terms and values, and presented to board members for ease of comprehension.

The drawback of the quantitative methodology is that, depending on the industry, organization, and assets, a proportion of risks may not be easy to quantify. Since this methodology prides itself on calculating statistical probabilities, and therefore on an inherent sense of objectivity, forcing unquantifiable risks into the process calls for judgment, which can diminish that objectivity. Any reduction in objectivity is an added weakness because almost all risk assessments are subjective to a degree, and reaching a specific threshold of objectivity is already a challenge.

Another possible downside of this methodology is communicating the results outside the boardroom. This issue can be resolved to an extent, provided the organization has the internal expertise to both approach the assessment and communicate the results unambiguously.

An advantage of this methodology is that it assesses risks based on solid numbers and data-driven analysis, so it's inherently objective to a degree. Even so, quantitative risk assessment requires accurate data and sound statistical analysis to derive possible risk exposures effectively.
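To make the idea of expressing risk in financial terms concrete, here is a minimal sketch. It assumes the common annualized loss expectancy convention (loss per event multiplied by expected events per year), which the text above does not name, and every risk name and dollar figure in it is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class QuantifiedRisk:
    name: str
    single_loss_usd: float   # estimated loss from one occurrence (hypothetical)
    events_per_year: float   # estimated yearly frequency (hypothetical)

    @property
    def annual_loss_usd(self) -> float:
        # Assumed convention: loss per event times events per year.
        return self.single_loss_usd * self.events_per_year


risks = [
    QuantifiedRisk("Stale privileged account abused", 120_000, 0.25),
    QuantifiedRisk("Password spray against service accounts", 40_000, 1.5),
]

# Rank by expected yearly loss so the figures can be compared directly.
ranked = sorted(risks, key=lambda r: r.annual_loss_usd, reverse=True)
for risk in ranked:
    print(f"{risk.name}: ${risk.annual_loss_usd:,.0f} per year")
```

The output is only as good as the two estimates fed into each risk, which is why the methodology depends so heavily on accurate historical data.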

On the other hand, qualitative risk assessment is a non-numerical, or characteristic evaluation. In other words, characteristics of threats are considered in order to derive the likelihood and impact probabilities. Typically, qualitative assessment categorizes risks using a scale with low, medium, high, and critical ratings to represent the levels of severity.

The advantage of this methodology is that it's easy to approach and its results can be communicated beyond the boardroom with little effort. Because the ratings are not numerical, almost all organizational stakeholders can understand them.

But there is a tradeoff. Qualitative risk assessment is more subjective than its counterpart—quantitative risk assessment—because it does not factor in numerical values. Typically, its reliance on expertise and judgment calls is higher, and more often than not, it is used when organizations lack quantitative data, or when it's complicated to quantify or measure the impact of risks in monetary or financial terms. Evaluating risks without numerical values also means that this methodology is susceptible to bias, and depends on the perceptions of the risk assessor or risk assessment team.

Here are the primary differences between quantitative and qualitative risk assessment methodologies. (This table was adapted from the Information Systems Risk Assessment Methods study published by ResearchGate.)

| Aspect | Quantitative methodology | Qualitative methodology |
|---|---|---|
| Level of objectivity/subjectivity | Results are mainly based on objective measurements | Results are mainly based on subjective measurements |
| Level of numerical relevancy | Benefit-cost ratio and issues are relevant | Numerical and monetary values are not as relevant |
| Level of data requirement | Requires substantial historical and analytical data such as threat frequency, likelihood, and more | Requires relatively less effort for monetary value and threat frequency development |
| Level of complexity | Requires substantial mathematical concepts and tools and hence, is a complex process | Does not require substantial mathematical concepts and tools; as such, the process is relatively uncomplicated |
| Level of expertise | Calls for technical and security expertise; usually performed by technical and security staff | Does not obligate technical and security expertise; possible for non-technical and non-security staff |

Semi-quantitative risk assessment combines the quantitative and qualitative methodologies. In contrast to a solely quantitative methodology that relies on numbers, and a solely qualitative methodology that relies on subjective perceptions and expert analysis, the semi-quantitative methodology combines the overarching principles of both, striking a balance. It retains statistical objectivity while also accommodating subjective interpretations and judgment calls informed by expertise and numerical values.

This methodology can utilize a numerical scale ranging from 1-10 or 1-100. That said, the scale is arbitrary and can be customized depending on organizational preferences and suitability. Once the range of the scale is finalized, it is then divided into three or more sub-ranges that will signify the qualitative level of severity of risk. Each quantitative subrange corresponds to a particular qualitative group. This example showcases how it's done:

| Quantitative scale | Qualitative scale |
|---|---|
| 8-10 | High |
| 4-7 | Medium |
| 1-3 | Low |
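The mapping in the example table can be sketched in a few lines. The function and constant names are illustrative only; the sub-ranges are the ones from the table, and an organization would substitute its own.

```python
# Sub-ranges taken from the example table above (1-10 scale).
BANDS = [
    (1, 3, "Low"),
    (4, 7, "Medium"),
    (8, 10, "High"),
]


def qualitative_level(score: int) -> str:
    """Return the qualitative group for a score on the 1-10 scale."""
    for low, high, label in BANDS:
        if low <= score <= high:
            return label
    raise ValueError(f"score {score} is outside the 1-10 scale")


for score in (2, 5, 9):
    print(score, qualitative_level(score))
```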

The three widely known approaches that prioritize different aspects of risk assessment are:

An asset-based approach focuses on identifying and evaluating the Tier 0 (critical) assets in the AD environment. Tier 0 includes accounts, groups, and other assets that have direct or indirect administrative control of an AD forest, its domains, or domain controllers, and all the assets in them. It can also include group policies, sensitive data, and anything around those assets. The objective of an asset-based approach is to gauge each asset's organizational value and then determine the potential impact if it were compromised and exploited.

This approach is aimed at prioritizing efforts and resources for the protection of the most valuable or critical assets. It also ensures that security measures and organizational objectives are aligned.

A vulnerability-based approach focuses on identifying and analyzing vulnerabilities in the AD environment. Vulnerabilities are AD system or operational flaws that attackers can exploit. The objective of this approach is to use vulnerability scanning and assessments to discover and address flaws such as outdated software, misconfigurations, and security gaps that could result in breaches or exploitation. Once vulnerabilities are discovered, they are prioritized and eventually addressed according to their level of risk and potential impact.

A threat-based approach focuses on identifying and understanding potential threats and possible attack scenarios targeting the AD environment. This approach relies on analyzing attackers' motivations, tactics, techniques, and procedures (TTPs). Mitigation efforts for specific scenarios are derived from security measures tailored to the potential threats. It uses concepts like threat modeling and encourages proactive measures built around identity threat detection and response (ITDR). Factors considered in threat modeling include, but are not limited to, insider threats, external attackers, and advanced persistent threats.

The advantage of a threat-based approach is that it extends discovery beyond the cybersecurity infrastructure, to where the psychology of attackers and their possible rationales become relevant factors.

Risk assessment standards establish the overarching guidelines and vital frameworks for evaluating and managing distinct types of risks across industries and domains. These standards offer structured methodologies to identify potential threats and assess their likelihood and potential impacts, which feeds into the eventual decision-making phase. Depending on the context, different types of risk assessment standards exist, each tailored to specific environments like AD and hybrid IAM. Apart from bolstering risk management practices, these standards also promote consistency, transparency, and accountability in addressing potential threats.

The DREAD acronym stands for Damage potential, Reproducibility, Exploitability, Affected users, and Discoverability. Each item requires that pertinent questions be answered in order to assign the appropriate rating to each threat, risk, or vulnerability:

Compared to other risk assessment standards, the DREAD model is relatively straightforward. It takes into account the DREAD items listed above. For a particular vulnerability in the AD, the risk assessor rates each item as high, medium, or low, and each rating is represented by a numerical value.

Once the rating values of all the items for a particular threat in the AD are derived, they are added together and the total is compared with the table below, which maps each risk rating to its corresponding numerical range:

| Risk rating (severity) | Numerical equivalent (values) |
|---|---|
| High | 12 - 15 |
| Medium | 8 - 11 |
| Low | 5 - 7 |
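As an illustration of the add-and-compare step, the sketch below assumes the per-item values low = 1, medium = 2, and high = 3. The text does not state them, but they are the values that make five items sum to the 5 to 15 range in the table. The example finding is hypothetical.

```python
DREAD_ITEMS = (
    "damage",
    "reproducibility",
    "exploitability",
    "affected_users",
    "discoverability",
)

# Assumed per-item values; five items then sum to between 5 and 15.
RATING_VALUES = {"low": 1, "medium": 2, "high": 3}


def dread_total(ratings: dict) -> int:
    """Add up the numerical values of the five DREAD item ratings."""
    missing = set(DREAD_ITEMS) - set(ratings)
    if missing:
        raise ValueError(f"missing DREAD items: {sorted(missing)}")
    return sum(RATING_VALUES[ratings[item]] for item in DREAD_ITEMS)


def dread_severity(total: int) -> str:
    """Map a total to the risk rating from the table above."""
    if 12 <= total <= 15:
        return "High"
    if 8 <= total <= 11:
        return "Medium"
    if 5 <= total <= 7:
        return "Low"
    raise ValueError(f"total {total} is outside the 5-15 range")


# Hypothetical AD finding, rated item by item.
finding = {
    "damage": "high",
    "reproducibility": "medium",
    "exploitability": "high",
    "affected_users": "medium",
    "discoverability": "low",
}
total = dread_total(finding)
print(total, dread_severity(total))
```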

CVSS stands for Common Vulnerability Scoring System. It's an open framework for scoring IT vulnerabilities and a different approach to qualitative risk analysis. When considering this standard, be aware that CVSS is a measure of vulnerability severity, not a measure of overall risk. It's well suited to organizations seeking precise and consistent vulnerability severity scores. CVSS can be utilized in two ways for different purposes:

That being said, the CVSS standard is composed of three primary metric classifications that are vulnerability-oriented:

Note: While the Base Metric is mandatory for CVSS, including Temporal and Environmental Metrics depends on whether they are contextually relevant and if there is enough information about them. Base score is the true derivative while Temporal and Environmental scores can increase or decrease the Base score.

Each primary metric is comprised of sub-metrics that are used to derive the score of the primary metrics. Sub-metrics for each primary metric include:

Base: Is the mandatory overarching metric of this model which enterprises resort to in most situations. It's composed of two sub-metrics:

Temporal: Characteristics of a vulnerability are measured with respect to its current status. The Temporal score is provided by the vendor or analyst. This metric refines the Base score to reflect the temporal characteristics of the vulnerability. It's composed of the following sub-metrics:

Resulting scores of Temporal metrics have the ability to affect the Base score if factored in. For example, they can increase or decrease the base score.

Environmental: This metric improves the Base score to reflect the vulnerabilities' environmental characteristics. In contrast to the Base score, the end-user calculates the Environmental group score. Organizations should factor in Environmental metrics to infer and gain true context of vulnerabilities via the consideration of these factors:

Environmental sub-metric classifications include:

| Score Range | Severity Level |
|---|---|
| 0.0 | None |
| 0.1 - 3.9 | Low |
| 4.0 - 6.9 | Medium |
| 7.0 - 8.9 | High |
| 9.0 - 10.0 | Critical |

CVSS scores range from 0 to 10. Each sub-range (0.1 - 3.9, 4.0 - 6.9, etc.) corresponds to a particular severity level (Low, Medium, etc.), with higher scores indicating greater severity.
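The score-to-severity lookup can be expressed as a small function. This is a sketch that uses the sub-ranges from the table above; the function name is illustrative.

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS score (0.0-10.0) to its severity level."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores range from 0 to 10, got {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


for score in (0.0, 3.9, 6.9, 8.9, 9.8):
    print(score, cvss_severity(score))
```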

The only mandatory primary metric in CVSS is the Base score. The scores of the sub-metrics involved (Exploitability and Impact subscores) are calculated to derive the overall Base score, which is calculated using a formula that weights every subscore.

To calculate the Temporal score, the assessor multiplies the Base score by the three sub-metrics of the Temporal metric (Exploit Code Maturity, Remediation Level, Report Confidence).
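The multiplication can be sketched as follows. The text does not say which CVSS version it has in mind; this sketch assumes CVSS v3.1, and the multiplier values and the round-up rule are reproduced from that version as best understood here, so they should be checked against the specification before being relied on.

```python
import math

# Assumed CVSS v3.1 multipliers; verify against the specification.
EXPLOIT_CODE_MATURITY = {
    "not_defined": 1.0, "high": 1.0, "functional": 0.97,
    "proof_of_concept": 0.94, "unproven": 0.91,
}
REMEDIATION_LEVEL = {
    "not_defined": 1.0, "unavailable": 1.0, "workaround": 0.97,
    "temporary_fix": 0.96, "official_fix": 0.95,
}
REPORT_CONFIDENCE = {
    "not_defined": 1.0, "confirmed": 1.0, "reasonable": 0.96, "unknown": 0.92,
}


def round_up_1dp(value: float) -> float:
    """Round up to one decimal place while tolerating floating-point drift."""
    scaled = round(value * 100_000)
    if scaled % 10_000 == 0:
        return scaled / 100_000
    return (math.floor(scaled / 10_000) + 1) / 10


def temporal_score(base: float, maturity: str, remediation: str, confidence: str) -> float:
    """Multiply the Base score by the three Temporal sub-metric values."""
    product = (
        base
        * EXPLOIT_CODE_MATURITY[maturity]
        * REMEDIATION_LEVEL[remediation]
        * REPORT_CONFIDENCE[confidence]
    )
    return round_up_1dp(product)


print(temporal_score(7.5, "proof_of_concept", "official_fix", "confirmed"))
```

Under these assumed values no multiplier exceeds 1.0, so leaving every Temporal sub-metric undefined returns the Base score unchanged.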

Environmental score involves a complex calculation relative to other scoring metrics. The five Environmental sub-metrics are used to recompute the Base and Temporal scores for a more precise assessment of the vulnerability-severity. Keep in mind that Environmental scores are calculated by the end user for a further refined evaluation of the severity of a vulnerability.

Conducting the CVSS process with the inclusion of Temporal and Environmental metrics makes the final evaluation of the vulnerability's severity more precise and realistic. Vulnerability severity can also be calculated using the CVSS calculator.

OWASP is the acronym for Open Web Application Security Project. The OWASP risk model focuses primarily on identifying and mitigating security risks associated with software applications. OWASP takes a standard approach to risk analysis: it estimates likelihood and impact, then uses the formula below to calculate the overall risk: Overall Risk = Likelihood * Impact

Note: The OWASP risk model is considerably more complex than DREAD or CVSS. It involves different types and scales of factors to consider in likelihood and impact calculations.

According to OWASP, there are six steps involved in conducting the risk assessment using the OWASP standard:

It's no surprise that the first step is to identify the risks that need to be rated. This is done by gathering information about the threat agent considered, the attack techniques that would be used, the vulnerabilities involved, and the impacts of successful exploits. Since there may be multiple attacker groups or multiple potential business impacts, it's recommended that you consider the worst-case scenario, which will produce the highest overall risk.

As soon as the risks are identified, the assessor needs to estimate the "likelihood" to determine how serious the risk is. Likelihood within the context of risk assessment refers to how likely it is that a specific vulnerability will be detected and exploited by attackers.

There are two sets of factors involved in the likelihood determination, namely Threat Agent Factors and Vulnerability Factors. The overarching scale ranges from 0 to 9, and each factor has a defining question and is associated with a set of likelihood ratings with pertinent sub-ranges.

These factors are associated with the threat agents in question. The objective is to assess the probability of a successful attack orchestrated by this specific group of threat agents.

| Threat agent factors | Defining questions | Likelihood ratings |
|---|---|---|
| Skill level | How technically skilled is this group of threat agents? | No technical skills (1), some technical skills (3), advanced computer user (5), network and programming skills (6), security penetration skills (9) |
| Motive | How motivated is this group of threat agents to find and exploit this vulnerability? | Low or no reward (1), possible reward (4), high reward (9) |
| Opportunity | What resources and opportunities are required for this group of threat agents to find and exploit this vulnerability? | Full access or expensive resources required (0), special access or resources required (4), some access or resources required (7), no access or resources required (9) |
| Size | How large is this group of threat agents? | Developers (2), system administrators (2), intranet users (4), partners (5), authenticated users (6), anonymous Internet users (9) |

*The ratings fall on the 0 to 9 scale, with the lowest values indicating the least threat and 9 indicating the greatest threat.

These factors are associated with the vulnerability in question. The objective is to assess the probability of the discovery and exploitation of a specific vulnerability.

| Vulnerability factors | Defining questions | Likelihood ratings |
|---|---|---|
| Ease of discovery | How easy is it for this group of threat agents to discover this vulnerability? | Practically impossible (1), difficult (3), easy (7), automated tools available (9) |
| Ease of exploit | How easy is it for this group of threat agents to actually exploit this vulnerability? | Theoretical (1), difficult (3), easy (5), automated tools available (9) |
| Awareness | How well known is this vulnerability to this group of threat agents? | Unknown (1), hidden (4), obvious (6), public knowledge (9) |
| Intrusion detection | How likely is an exploit to be detected? | Active detection in application (1), logged and reviewed (3), logged without review (8), not logged (9) |
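To show how the eight likelihood factors might be combined, here is a minimal sketch. It assumes the averaging step and the Low (0 to under 3), Medium (3 to under 6), and High (6 to 9) bands described in the OWASP Risk Rating Methodology, neither of which is spelled out in the text above. The ratings chosen for the example are hypothetical.

```python
from statistics import mean


def likelihood_level(score: float) -> str:
    """Band a 0-9 score (assumed bands: <3 Low, <6 Medium, else High)."""
    if not 0 <= score <= 9:
        raise ValueError(f"score {score} is outside the 0-9 scale")
    if score < 3:
        return "Low"
    if score < 6:
        return "Medium"
    return "High"


def overall_likelihood(threat_agent: dict, vulnerability: dict) -> float:
    """Average all threat agent and vulnerability factor ratings."""
    return mean([*threat_agent.values(), *vulnerability.values()])


# Hypothetical ratings picked from the two tables above.
threat_agent = {"skill_level": 5, "motive": 4, "opportunity": 7, "size": 6}
vulnerability = {
    "ease_of_discovery": 7,
    "ease_of_exploit": 5,
    "awareness": 6,
    "intrusion_detection": 8,
}

score = overall_likelihood(threat_agent, vulnerability)
print(score, likelihood_level(score))
```

A plain average treats every factor as equally important; weighting individual factors is one of the customizations an organization could apply.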

There are two kinds of impacts when trying to ascertain a successful attack's potential consequence:

Of the two, business impact is more relevant and takes precedence. The issue is that not all employees, risk assessment teams, or even the overall IT division necessarily have access to the information required to assess a cyberattack's impact on the business. When that is the case, it's reasonable to consider technical impact in place of business impact. This scenario requires substantial information on the technical impacts so that the business risks can still be determined.

The overarching scale ranges from 1 to 9 (1 representing the lowest threat and 9 representing the highest threat) and each factor has a defining question and associated set of impact ratings with pertinent sub-ranges.

These factors are aligned with conventional security concerns including confidentiality, integrity, availability, and accountability. Each factor is intended to gauge the impact on the infrastructure if a vulnerability is exploited.

| Technical impact factors | Defining questions | Impact ratings |
|---|---|---|
| Loss of confidentiality | How much data could be disclosed and how sensitive is it? | Minimal non-sensitive data disclosed (2), minimal critical data disclosed (6), extensive non-sensitive data disclosed (6), extensive critical data disclosed (7), all data disclosed (9) |
| Loss of integrity | How much data could be corrupted and how damaged is it? | Minimal slightly corrupt data (1), minimal seriously corrupt data (3), extensive slightly corrupt data (5), extensive seriously corrupt data (7), all data totally corrupt (9) |
| Loss of availability | How much service could be lost and how vital is it? | Minimal secondary services interrupted (1), minimal primary services interrupted (5), extensive secondary services interrupted (5), extensive primary services interrupted (7), all services completely lost (9) |
| Loss of accountability | Are the threat agents’ actions traceable to an individual? | Fully traceable (1), possibly traceable (7), completely anonymous (9) |

Although business impacts spring from technical impacts, assessing them requires in-depth visibility into what is vital to the organization's business. Moreover, business impact insights are vital for C-suite executives because the business risk is what justifies the investment in fixing security problems. Note that business impacts are more specific and significant to each organization than technical impacts, threat agent factors, or vulnerability factors.

| Business impact factors | Defining questions | Impact ratings |
|---|---|---|
| Financial damage | How much financial damage will result from an exploit? | Less than the cost to fix the vulnerability (1), minor effect on annual profit (3), significant effect on annual profit (7), bankruptcy (9) |
| Reputation damage | Would an exploit result in reputation damage that would harm the business? | Minimal damage (1), loss of major accounts (4), loss of goodwill (5), brand damage (9) |
| Non-compliance | How much exposure does non-compliance introduce? | Minor violation (2), clear violation (5), high profile violation (7) |
| Privacy violation | How much personally identifiable information could be disclosed? | One individual (3), hundreds of people (5), thousands of people (7), millions of people (9) |

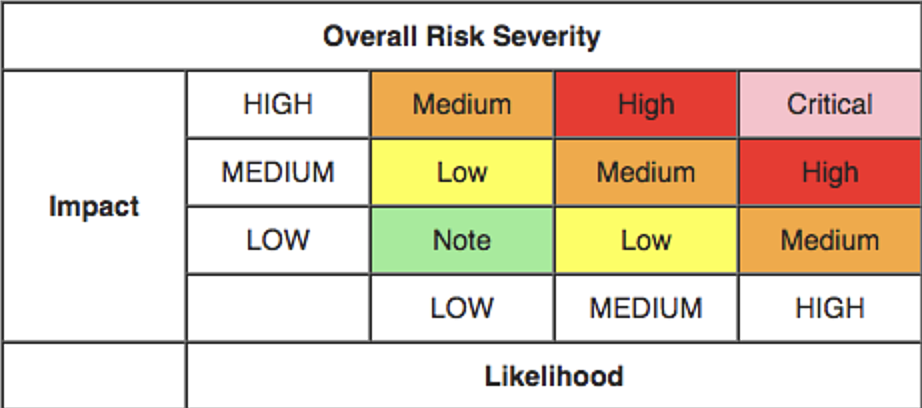

The next step is to determine the severity of the risk by combining likelihood and impact using a correlation matrix. If the assessor has access to substantial business information, business impact scores should be used in preference to technical impact scores; if not, technical impact scores should be used instead. The correlation matrix displayed below maps the intersection of likelihood and impact to the overall risk severity:

Once it's clear how serious all the potential risks are, the next step is to decide which risks to fix first. This decision is based on how severe the risks are. The ones labeled as "Critical" or "High" should be dealt with before the others because they pose the biggest threat to the organization.
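The severity lookup and the fix-first ordering described above can be sketched together. The matrix values are reproduced from the OWASP Risk Rating Methodology as best understood here and should be checked against the published matrix; the findings and their ratings are hypothetical.

```python
# Keys are (likelihood, impact); values are the overall risk severity.
# Assumed to match the OWASP correlation matrix; verify before use.
SEVERITY_MATRIX = {
    ("Low", "Low"): "Note",
    ("Medium", "Low"): "Low",
    ("High", "Low"): "Medium",
    ("Low", "Medium"): "Low",
    ("Medium", "Medium"): "Medium",
    ("High", "Medium"): "High",
    ("Low", "High"): "Medium",
    ("Medium", "High"): "High",
    ("High", "High"): "Critical",
}

# Most urgent first.
PRIORITY_ORDER = ["Critical", "High", "Medium", "Low", "Note"]


def overall_severity(likelihood: str, impact: str) -> str:
    """Look up the severity at the intersection of likelihood and impact."""
    return SEVERITY_MATRIX[(likelihood, impact)]


# Hypothetical findings: (name, likelihood level, impact level).
findings = [
    ("Unconstrained delegation on a member server", "Medium", "High"),
    ("Verbose LDAP error messages", "Low", "Low"),
    ("Kerberoastable Domain Admin account", "High", "High"),
]

rated = [(name, overall_severity(lik, imp)) for name, lik, imp in findings]
rated.sort(key=lambda item: PRIORITY_ORDER.index(item[1]))
for name, severity in rated:
    print(f"{severity:8} {name}")
```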

During this decision-making process, there are some things to consider. Let's imagine a situation: Risk D is rated as having a medium level of severity. This means it's in the middle of the priority list. On one side, Risk D is common and costs the company about $5,000 every year. On the other side, fixing this risk in a way that will be effective in the long run would cost around $200,000.

Here's where it gets interesting. Sometimes, it might make sense to accept the yearly cost of $5,000 for Risk D. This is because there are other, more important risks to deal with first (and these are costing more than $5,000 each year). Also, the cost of fixing Risk D is extremely high (and the ROI can take years). This scenario shows us that it's not always the best choice to fix every single risk. Instead, a business might choose to incur the cost of $5,000 each year for Risk D. Spending $200,000 to fix it might not be worth it, especially if it's not a top priority and doesn't cause as much harm as other risks.
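The Risk D trade-off comes down to simple arithmetic. The sketch below computes a plain payback period from the two figures in the scenario; it assumes the fix eliminates the entire yearly cost and ignores discounting, both simplifications that are not part of the original scenario.

```python
def payback_years(fix_cost: float, annual_loss: float) -> float:
    """Years of avoided losses needed to recover the cost of the fix."""
    if annual_loss <= 0:
        raise ValueError("annual_loss must be positive")
    return fix_cost / annual_loss


# Figures from the Risk D scenario.
years = payback_years(fix_cost=200_000, annual_loss=5_000)
print(f"Fixing Risk D pays for itself after {years:.0f} years")
```

A payback period measured in decades is what makes accepting the yearly cost a defensible choice in this scenario.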

According to OWASP, having a risk ranking framework that is customizable for a business is critical for adoption. The reason for this is the amount of time that can go to waste wrangling about risk ratings when they're not corroborated by a model such as OWASP. The desired results can be achieved in a more efficient manner if the model itself is customized to match the perceptions of people involved regarding the seriousness of a risk. Some common ways to customize this model are:

The National Institute of Standards and Technology's Special Publication 800-30, Guide for Conducting Risk Assessments, is probably one of the most complex models out there, but it's not without its perks.

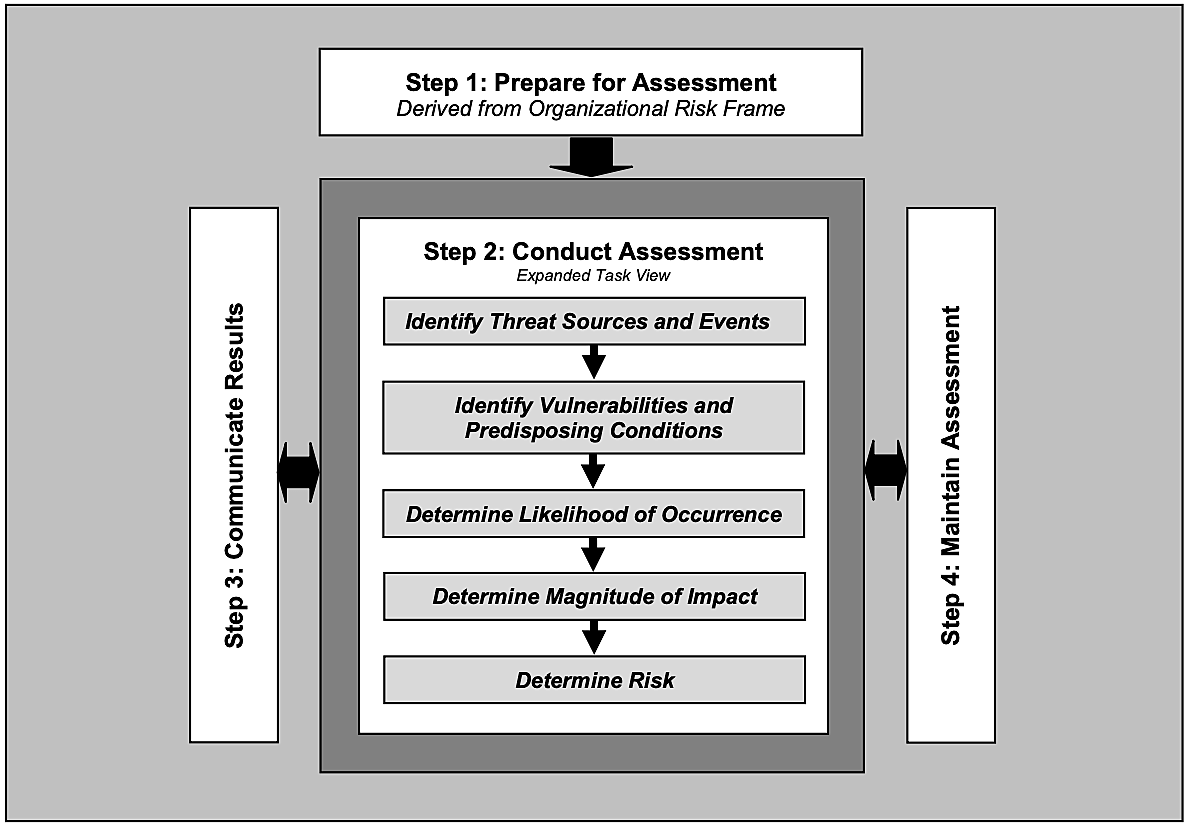

In their publication, NIST clearly defines their risk assessment process. The image below illustrates the basic steps involved:

The steps involved in conducting an adversarial risk assessment:

The first step is to detail all aspects of the system to be evaluated using the NIST SP 800-30 risk assessment standard. The system's components and data flow for its hardware, software, network environments, and interfaces to other external systems need to be defined clearly. Once the assessment scope has been defined and all the relevant aspects of the system have been taken into consideration, the assessor can move on to the next step.

For the second step, the assessor initiates the risk assessment process to identify potential threats, detect internal and external vulnerabilities in the system, and determine threat vectors by reviewing attack history and correlating it to the insights obtained through predictive analysis.

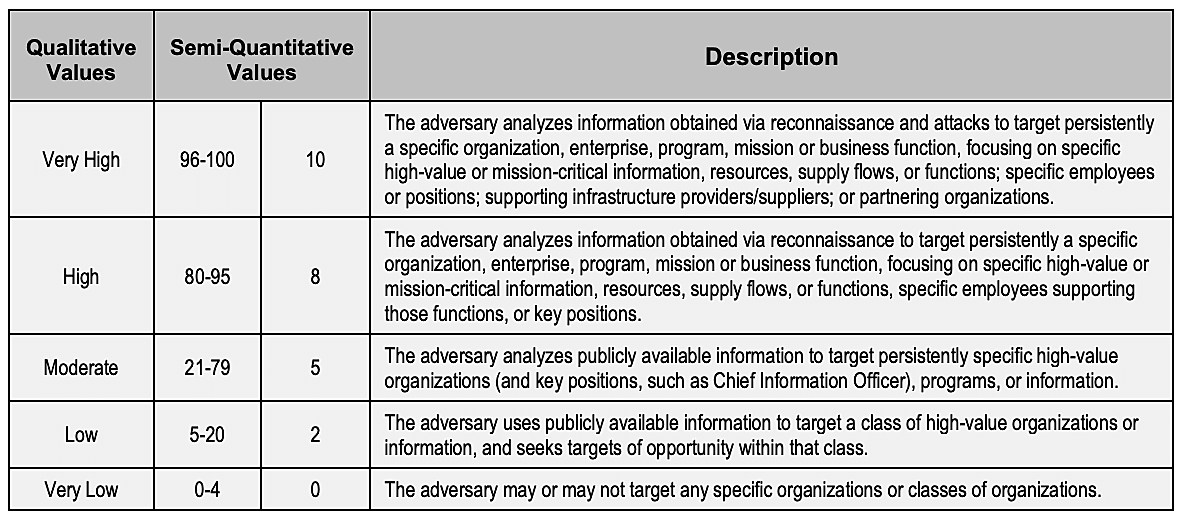

The assessor then needs to identify all the possible threat sources using an assessment scale for three considered factors:

After threat source identification, the next aim is to identify and determine the relevance of threat events. Potential threat events are established based on the considered vulnerabilities, which can differ from one organization to another, and NIST recommends characterizing the chosen vulnerabilities by TTPs. The document, NIST SP 800-30 Rev. 1, has a detailed characterization of threat events by TTPs.

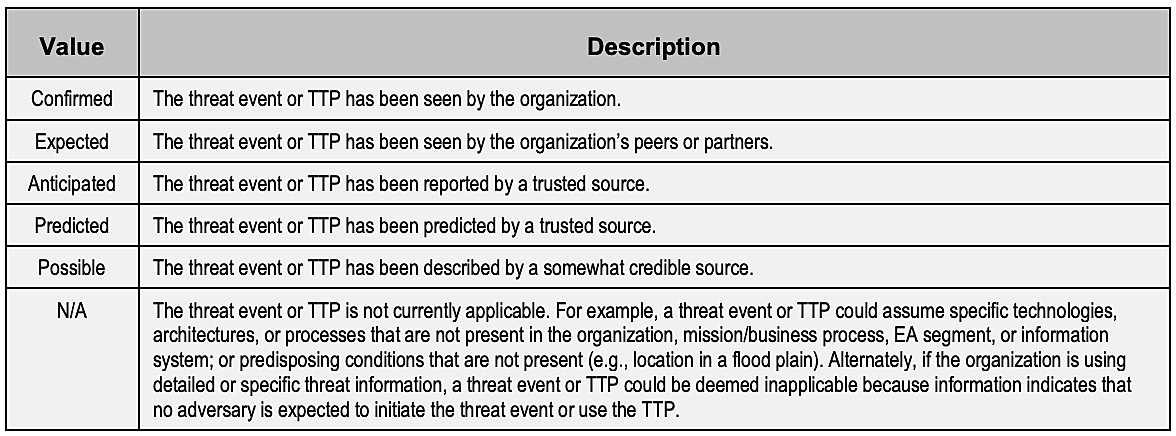

Here is the NIST assessment scale for ranking the relevance of threat events:

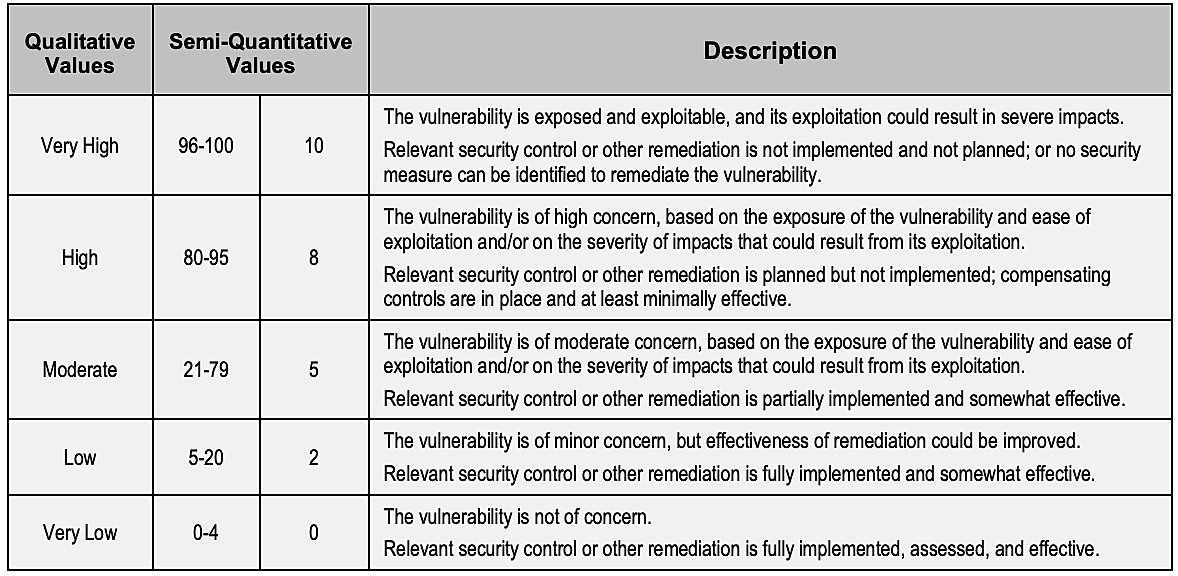

In this step, the objective is to identify and ascertain how severe each vulnerability is. Note that an organization can choose to skip this step if it already has decided on a set of risks and vulnerabilities it wants to target.

Next, previous risk assessments are scrutinized, and the reviews and comments from auditors are evaluated. Subsequently, security needs are mapped against the infrastructure security test results. The advantage here is that NIST SP 800-30 makes it possible to extract the active vulnerabilities present within each defined system boundary.

Where the risk assessment is low, or where information about past assessments is not substantial or is not available, it is still possible to consider factors such as vulnerability exposure, ease of discoverability, and ease of exploitation.

Here is the NIST assessment scale for ranking the vulnerability severity:

This step is optional. NIST SP 800-30 evaluates the existing system controls in place, and any additional controls that need to be defined depending on the requirement. Ultimately, the assessor should work with a comprehensive list of existing and planned controls.

Unlike ISO 27005, an international standard for IT risk assessment in which control analysis precedes vulnerability analysis, NIST SP 800-30 identifies system and identity-related vulnerabilities from the ground up before factoring in mitigation by existing controls.

This step follows once the information gathered in the first four steps has established the primary weaknesses in the identity environment. The assessor needs to determine how likely it is for threats to materialize. This step is equivalent to the preparation of threat profiles under the OCTAVE approach.

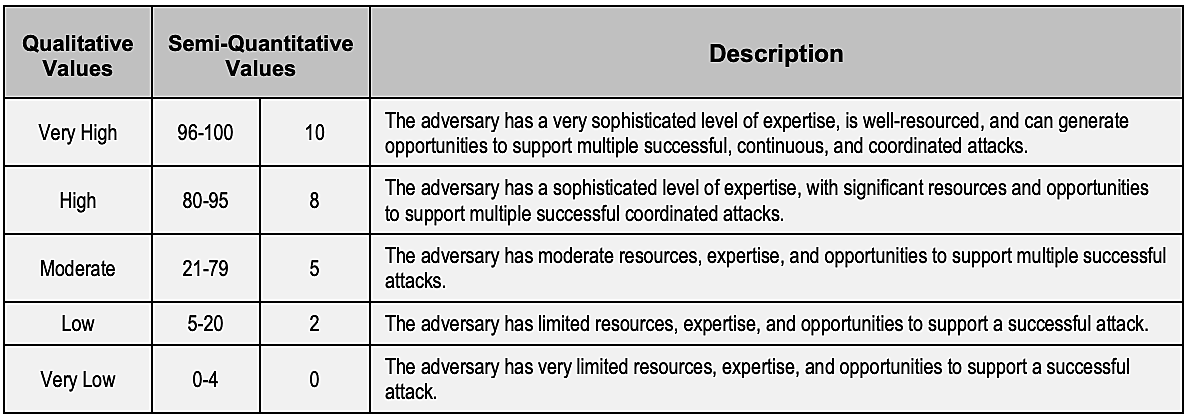

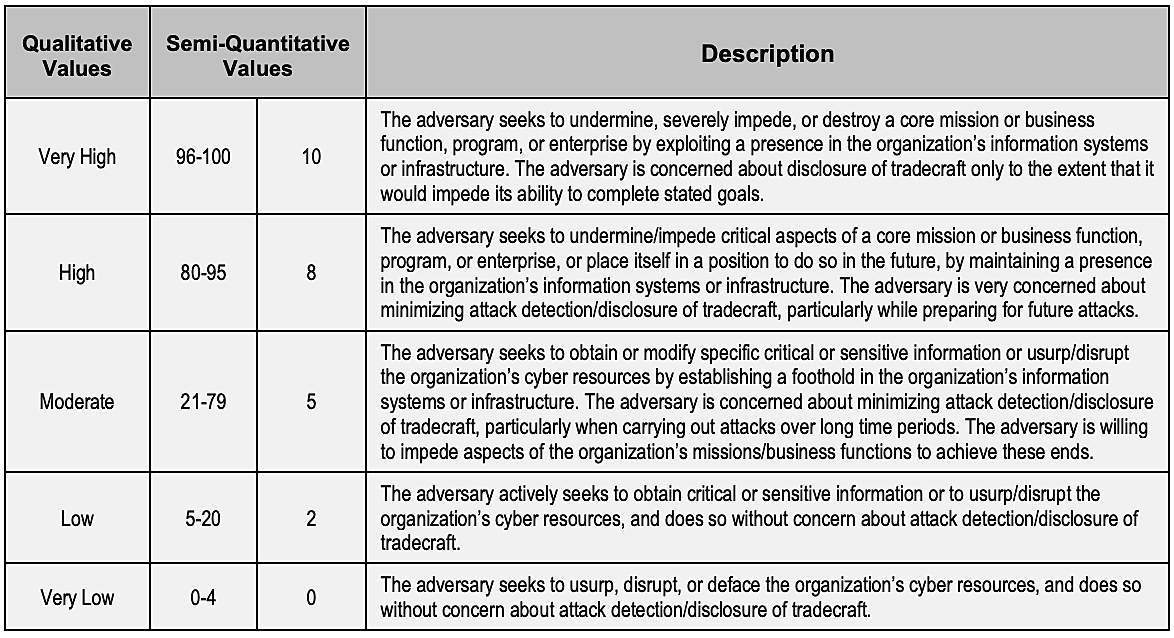

This is a process where each threat is given a likelihood rating. There are two assessment scales involved:

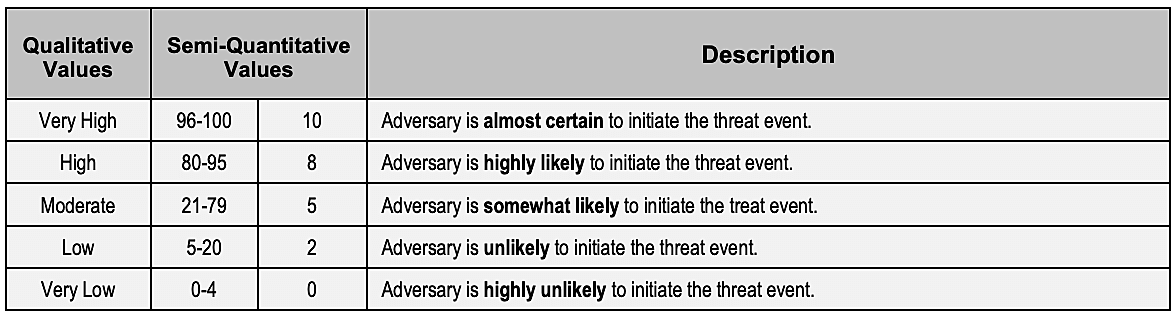

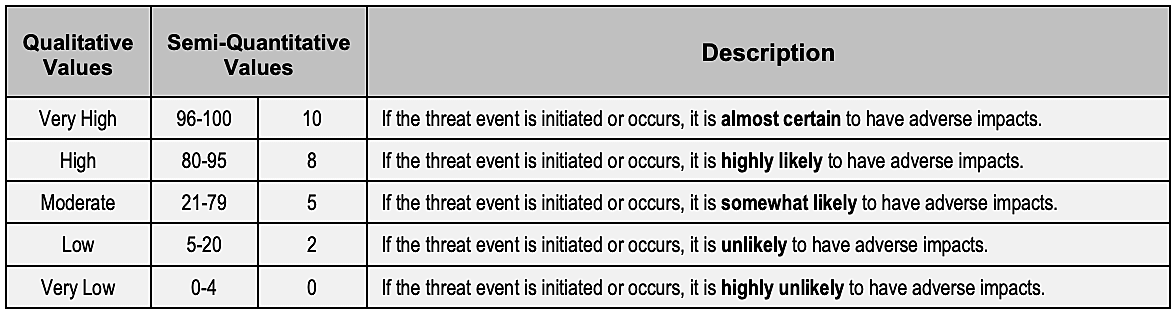

The first process determines the likelihood of a threat event initiation (adversarial), in which the assessor assigns rating values ranging from 0 to 100, or 0 to 10. Either can be further divided into sub-ranges with corresponding qualitative values.

Here is the NIST assessment scale for ranking the likelihood of threat event initiation (adversarial):

The second thing to determine is the likelihood of a threat event resulting in adverse impact. Again, the assessor is required to assign rating values ranging from 0 to 100 and/or 0 to 10; and the sub-ranges involved have a qualitative correspondent.

Here is the NIST assessment scale for ranking the likelihood of threat event resulting in adverse impact:

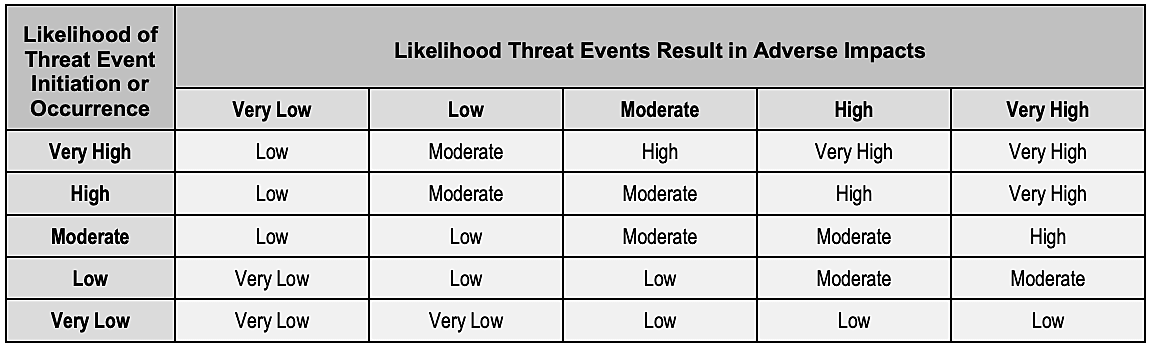

Once both likelihood calculations are completed, the final step in this stage is determining the overall likelihood by referencing the assessment scale below. Using this correlation matrix, the assessor maps the overall likelihood by combining the likelihood of threat event initiation with the likelihood that the threat event results in adverse impact.

Here is the NIST assessment scale for ranking the overall likelihood:

Example: If Risk A has a qualitative rating of "Low" for likelihood of threat event initiation and "High" for likelihood of threat events resulting in adverse impact, the overall likelihood would be "Moderate."
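The lookup in the Risk A example can be sketched as a two-dimensional table. The matrix below is transcribed from the overall likelihood scale in NIST SP 800-30 Rev. 1 as best understood here; treat the individual cells as assumptions and confirm them against the publication. It does agree with the Risk A example above.

```python
LEVELS = ["Very Low", "Low", "Moderate", "High", "Very High"]
VL, L, M, H, VH = LEVELS

# Rows: likelihood of threat event initiation (Very Low to Very High).
# Columns: likelihood the event results in adverse impact (same order).
# Cell values are assumed to match the NIST scale; verify before use.
OVERALL_LIKELIHOOD = [
    [VL, VL, L, L, L],   # initiation: Very Low
    [VL, L, L, M, M],    # initiation: Low
    [L, L, M, M, H],     # initiation: Moderate
    [L, M, M, H, VH],    # initiation: High
    [L, M, H, VH, VH],   # initiation: Very High
]


def overall_likelihood(initiation: str, adverse_impact: str) -> str:
    """Combine the two likelihood ratings into an overall likelihood."""
    row = LEVELS.index(initiation)
    col = LEVELS.index(adverse_impact)
    return OVERALL_LIKELIHOOD[row][col]


# Risk A from the example above.
print(overall_likelihood("Low", "High"))
```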

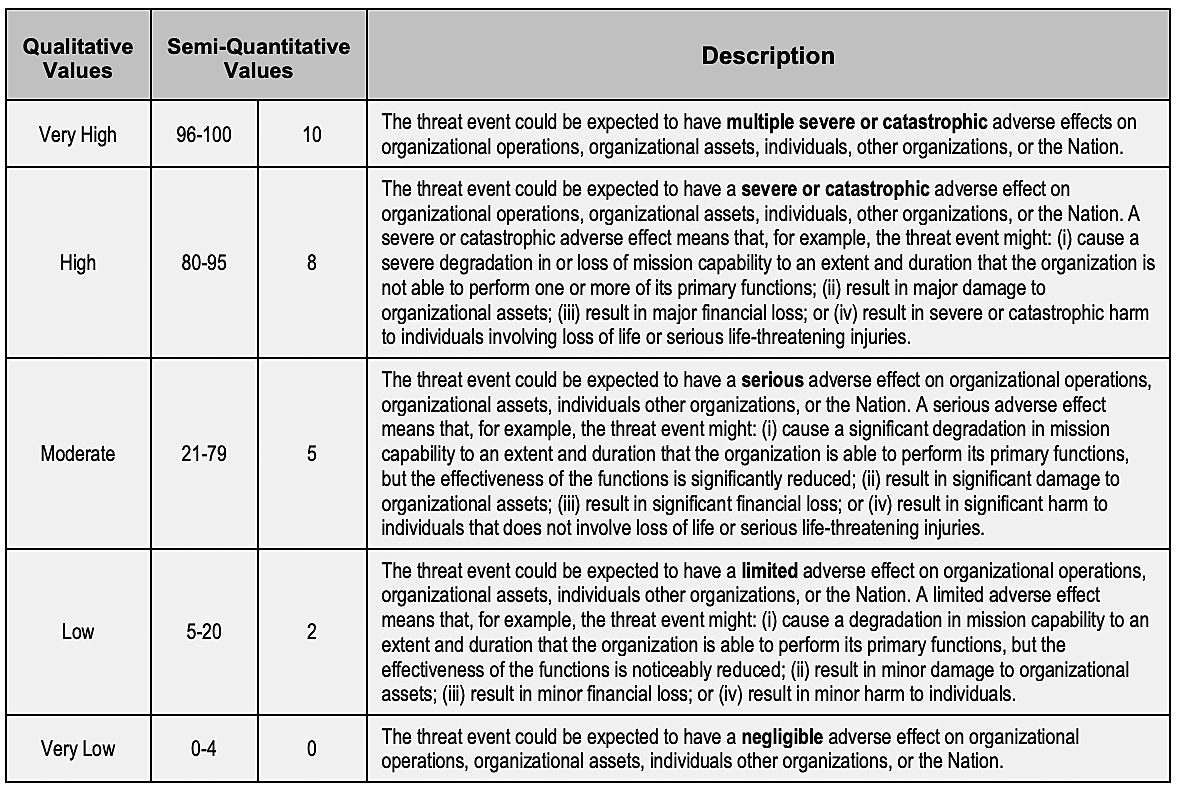

Once likelihood has been determined, the next step is to determine the impact of threat events, that is, how severe the impact on the business would be if the vulnerability were exploited. Usually, impact factors include:

Examples of the types of impact stated by NIST include:

Note: Please remember that the factors for determining impact can be highly subjective and change according to organizational needs, suitability, and preferences.

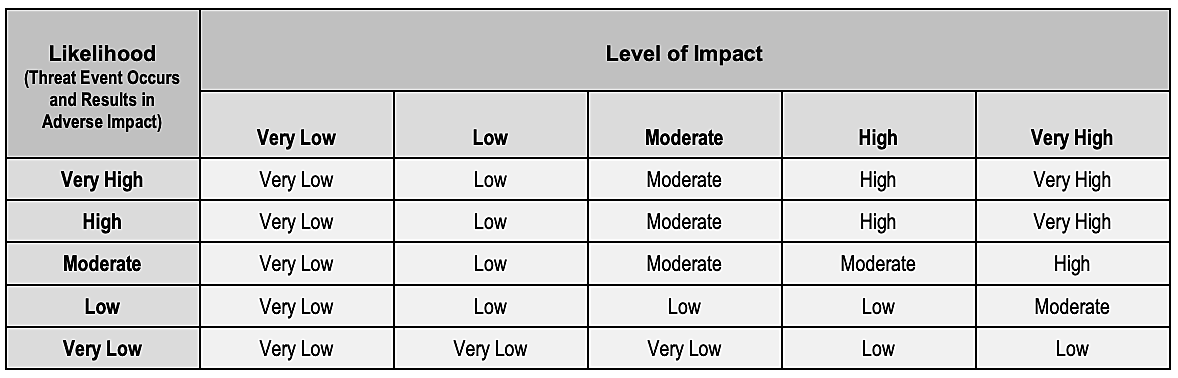

This is the final step pertaining to numerical calculation and analysis. The level of risk is determined in a qualitative manner by correlating the threat likelihood and magnitude of impacts using the correlation matrix. The qualitative outputs can then be quantified into risk ratings or risk scores.

Here is the NIST correlation matrix for determining the qualitative level of risk:

For example, Risk B has a likelihood rating of "Moderate" and an impact rating of "High." This means that the overall rating of Risk B is "Moderate."

A "Moderate" qualitative rating corresponds to a semi-quantitative rating of 21 to 79 on a scale of 0 to 100, or 3 to 5 on a scale of 0 to 10, according to NIST's assessment scale:

Therefore, according to the assessment scale, Risk B has a qualitative rating of Moderate, and a semi-quantitative rating of 21 to 79.
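The Risk B walkthrough can be sketched the same way. The risk matrix and the semi-quantitative ranges other than Moderate (21 to 79, stated above) are transcribed from NIST SP 800-30 Rev. 1 as best understood here and are assumptions to verify against the publication.

```python
LEVELS = ["Very Low", "Low", "Moderate", "High", "Very High"]
VL, L, M, H, VH = LEVELS

# Rows: overall likelihood (Very Low to Very High).
# Columns: level of impact (same order).
# Cell values are assumed to match the NIST matrix; verify before use.
RISK_MATRIX = [
    [VL, VL, VL, L, L],  # likelihood: Very Low
    [VL, L, L, L, M],    # likelihood: Low
    [VL, L, M, M, H],    # likelihood: Moderate
    [VL, L, M, H, VH],   # likelihood: High
    [VL, L, M, H, VH],   # likelihood: Very High
]

# Semi-quantitative ranges on the 0-100 scale. Only the Moderate range
# is stated in the text; the others are assumptions.
SEMI_QUANTITATIVE = {
    VL: (0, 4),
    L: (5, 20),
    M: (21, 79),
    H: (80, 95),
    VH: (96, 100),
}


def risk_level(likelihood: str, impact: str) -> str:
    """Look up the qualitative level of risk for a likelihood and impact."""
    return RISK_MATRIX[LEVELS.index(likelihood)][LEVELS.index(impact)]


# Risk B from the example above.
level = risk_level("Moderate", "High")
low, high = SEMI_QUANTITATIVE[level]
print(f"Risk B: {level} ({low} to {high} on the 0-100 scale)")
```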

In this stage, which follows the numerical calculation and analysis, the concluding control recommendations are made. Those recommendations could involve reducing the threat likelihood or mitigating the impact in order to decrease the risk score.

Consider the following scenario: Organization X wants to conduct a risk assessment and needs to determine which risk factors to evaluate.

The first decision is whether to conduct the risk assessment within a particular division or across the entire organization. Following this, it's possible to determine a suitable methodology to use. Factors that influence these decisions can include the organization's industry, location, and size.

Other factors are security and regulatory frameworks that differ by region and which might require a specific type of risk methodology for regulatory compliance.

As mentioned before, all risk assessment methodologies are subjective. Ultimately, the best-suited risk assessment methodology for an organization can be settled on by considering all the factors recommended and deemed necessary by the risk standard, the organization, and the regulatory frameworks.