If you spend any time online, you have probably come across ChatGPT, OpenAI's chatbot, which many compare to Google's search engine. While its popularity continues to grow thanks to its user-friendly interface, cybercriminals are now using it to their advantage.

The AI-powered chatbot was introduced by OpenAI on November 30, 2022.

The AI-powered chatbot was introduced by OpenAI on November 30, 2022.

Artificial intelligence (AI) has been the talk of the town for quite a while now, and the world has seen the various ways in which it can be put to good use. But like all technological developments, AI in the wrong hands could spell disaster.

While ChatGPT owes its rise to its conversational interface, the tool's popularity alone cannot be blamed for the recent spike in AI-based cyberattacks. In fact, the security community has long expected AI-based attacks to grow rapidly.

This is hardly surprising: even before ChatGPT rose to fame, Traficom's report *The security threat of AI-enabled cyberattacks* predicted a marked increase in AI-based attacks over the next five years.

The report discusses techniques hackers could use to carry out AI-based attacks, such as spear phishing and malware coding. Here's how cybercriminals are using ChatGPT to execute these attacks.

Spear phishing attacks and impersonation: Attackers are using ChatGPT to draft convincing phishing emails that impersonate senior management or other important officials. These emails can be highly persuasive and could trick unsuspecting users or employees into clicking malicious links.

Malware coding: According to Check Point Research, hackers have posted screenshots showing how they used the application to write malicious code that can be used to carry out a range of execution-based attacks, including PowerShell attacks.

For now, AI-based attacks are not yet widespread. Many cybercriminals still prefer other attack techniques because they believe AI is complicated, and many lack the technical knowledge to use it. But this could change quickly.

Script kiddies are already using AI-based platforms like ChatGPT to write new malware, and more experienced cybercriminals may start using them extensively in the future. This could lead to large-scale adoption of AI-based software for executing cyberattacks. On top of this, AI-driven voice automation could make vishing (voice phishing) attacks more efficient.

The fast-paced world of AI might be difficult to keep up with, but at the end of the day, a phishing email, whether generated by AI or drafted by a human, is a social engineering attack. Organizations can combat this from the outset through adequate awareness training and investment in the right cybersecurity tools.

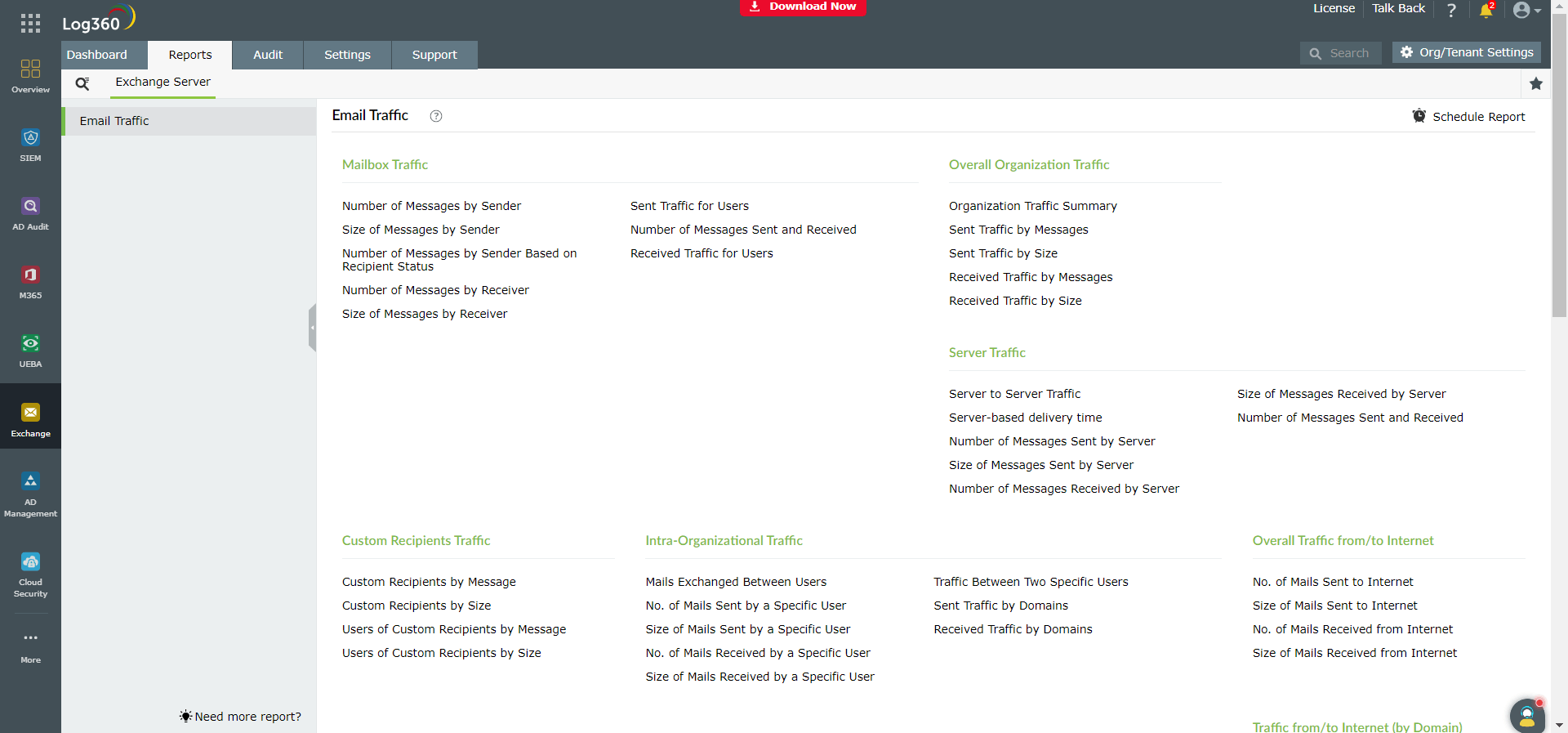

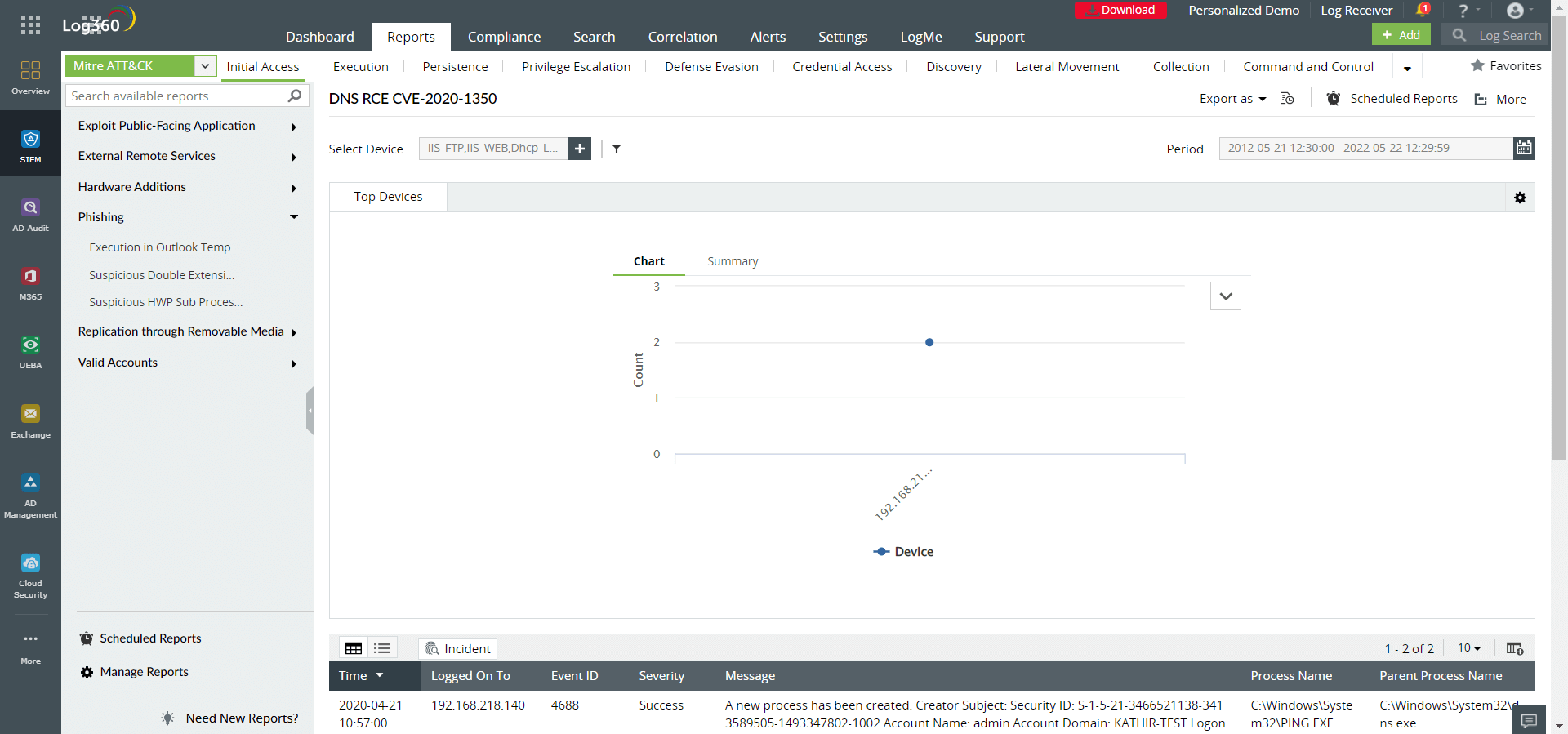

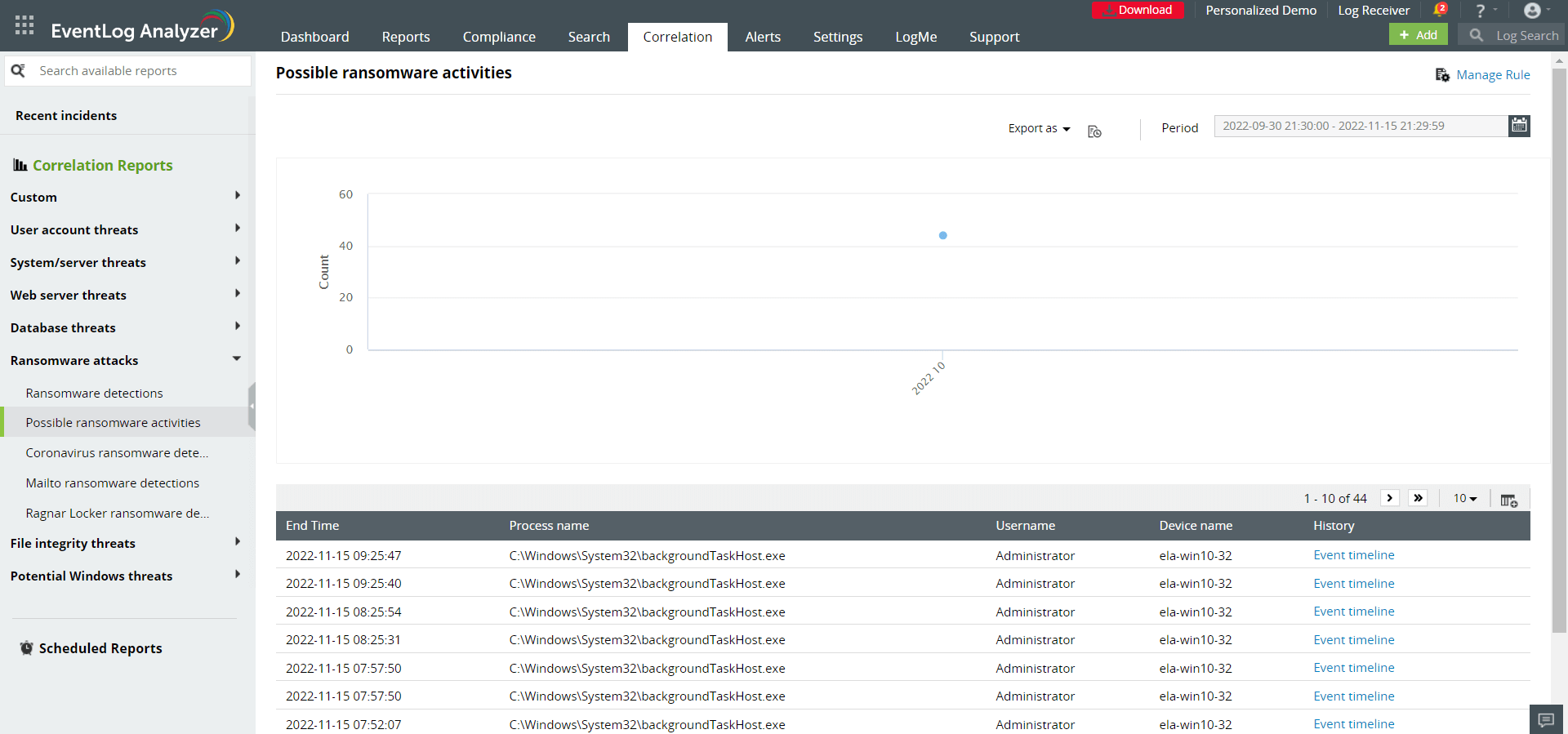

A SIEM solution like ManageEngine Log360 comes equipped with reports that can help security analysts easily spot malicious activities that could indicate phishing or ransomware attacks. These include reports like:

For more information about how a SIEM solution like ManageEngine Log360 can help you detect phishing or ransomware attacks, you can sign up for a free, personalized demo with our product experts.

You will receive regular updates on the latest news on cybersecurity.

© 2021 Zoho Corporation Pvt. Ltd. All rights reserved.