What top organizations implementing MLOps have in common: Real-world use cases

Summary

Top organizations implementing MLOps, such as Netflix, Uber, and Spotify, share several key practices and MLOps use cases that help streamline machine learning (ML) operations and maximize their impact.

First, these organizations prioritize end-to-end model lifecycle management, ensuring smooth integration from data acquisition to model deployment. This approach minimizes risks, accelerates innovation, and aligns ML with business objectives. Automation is another critical element; most organizations use tools to automate deployment, scaling, and continuous model updates, reducing manual intervention and enhancing performance consistency.

Lastly, scalability, flexibility, and cross-functional collaboration are essential. Leading MLOps adopters invest in robust infrastructure for large-scale data handling and foster collaboration between teams, ensuring models align with business goals. Continuous learning and iteration also drive ongoing improvements, ensuring models evolve with changing data.

In today's digital-first economy, organizations are increasingly exploring MLOps use cases at scale to accelerate innovation, improve operational efficiency, and gain a competitive edge. However, implementing ML models at scale presents significant challenges, from managing the sheer volume of data to ensuring models are reliable in the production environment.

To tackle these challenges and realize the full potential of ML, organizations are turning to machine learning operations (MLOps): a practice that combines ML, DevOps, and data engineering to streamline the entire ML lifecycle.

Top organizations like Netflix, Uber, and Spotify have become benchmarks in the successful implementation of MLOps. While the approaches followed in these real-world MLOps examples vary, there are several overlapping strategic practices that can offer key insights on enterprise MLOps workflows for IT decision makers looking to enhance their MLOps implementation and ROI.

Top MLOps use cases and best practices driving real-world innovation

Managing the complete MLOps lifecycle: From development to deployment

Effective MLOps implementation strategies often focus on comprehensive management of the ML model lifecycle. End-to-end lifecycle management is not just a "nice-to-have" MLOps use case or technical requirement but a strategic imperative.

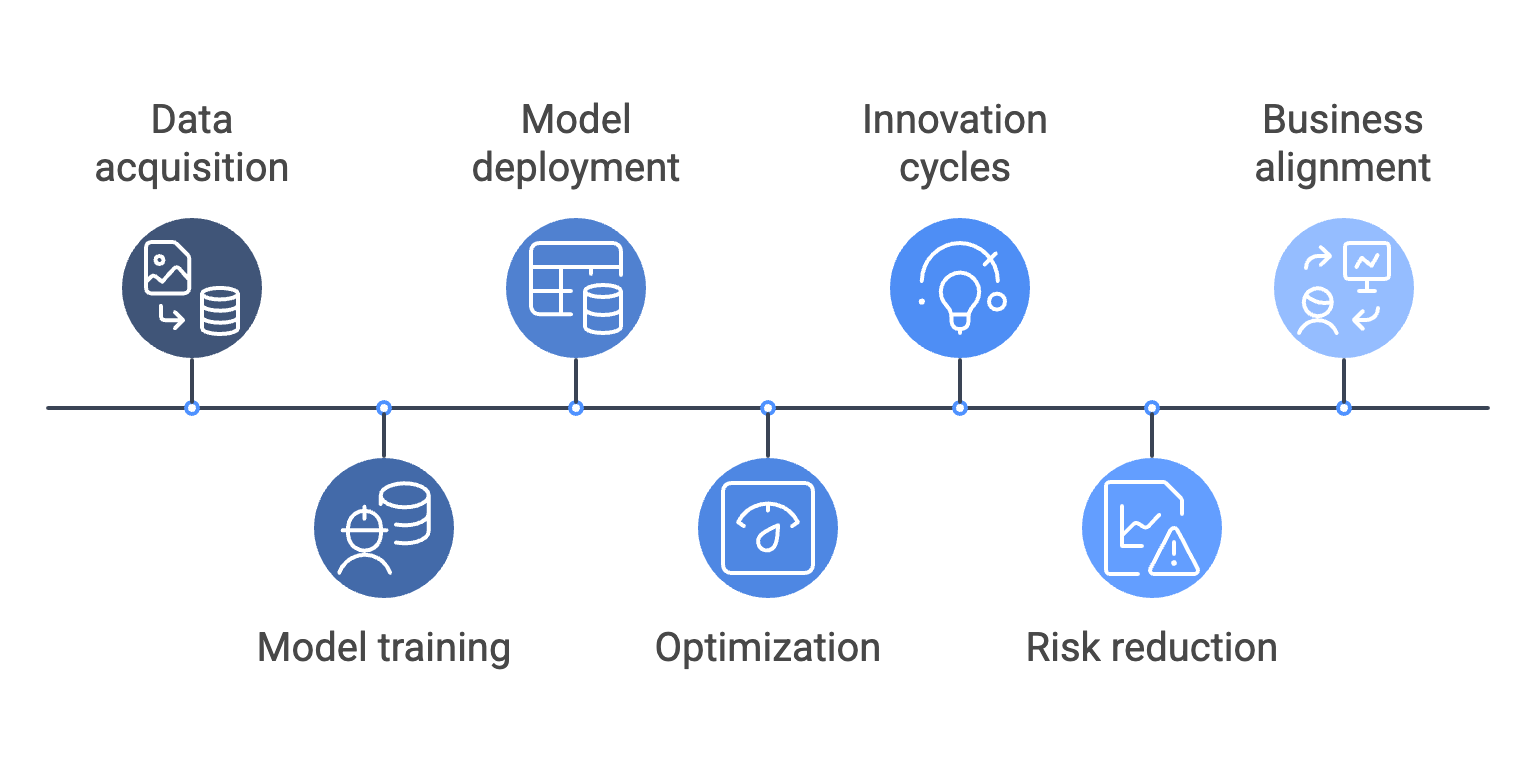

Top organizations implementing MLOps interconnect the ML model's life cycle stages, from data acquisition to model deployment, and optimize them for efficiency and scalability. This accelerates innovation cycles, reduces operational risks, and aligns ML initiatives with broader business objectives.

For instance, Uber’s Michelangelo platform integrates the entire ML workflow, enabling rapid iteration and seamless deployment of models across its global operations.

Technical insight: Implementing an end-to-end MLOps framework requires robust workflow orchestration and MLOps implementation tools that can handle the complexity of modern ML workflows. To handle MLOps deployment challenges, organizations require tools for version control, experiment tracking, and automated testing, all of which are critical for maintaining the integrity and reproducibility of ML models.
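To make the reproducibility point concrete, here is a minimal sketch of experiment tracking using only the Python standard library. The `ExperimentLog` class, the `fingerprint` helper, and the sample parameters and metrics are hypothetical names and values chosen for illustration; they do not represent any particular vendor's tracking tool.

```python
import hashlib
import json


def fingerprint(obj):
    """Return a short, stable SHA-256 digest of any JSON-serializable object."""
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


class ExperimentLog:
    """In-memory stand-in for an experiment tracker (hypothetical, for illustration)."""

    def __init__(self):
        self.runs = {}

    def log_run(self, params, training_rows, metrics):
        # The run ID is derived from the inputs, so repeating a run with the
        # same parameters and the same data maps to the same record.
        data_version = fingerprint(training_rows)
        run_id = fingerprint({"params": params, "data": data_version})
        self.runs[run_id] = {
            "params": params,
            "data_version": data_version,
            "metrics": metrics,
        }
        return run_id

    def best_run(self, metric):
        """Return the ID of the run with the highest value for `metric`."""
        return max(self.runs, key=lambda rid: self.runs[rid]["metrics"][metric])


log = ExperimentLog()
rows = [[1.0, 0], [2.0, 1], [3.0, 1]]  # illustrative training data
first = log.log_run({"learning_rate": 0.1}, rows, {"accuracy": 0.81})
second = log.log_run({"learning_rate": 0.01}, rows, {"accuracy": 0.86})
print(log.best_run("accuracy") == second)
```

Deriving the run ID from the parameters and a data fingerprint is one simple way to tie a result back to the exact inputs that produced it. A production tracker would also persist records and capture the code version.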

Automating deployment and monitoring in large-scale MLOps

Another powerful MLOps use case is automating model deployment and monitoring to minimize human intervention and improve time-to-market. Automation enables teams to focus on high-impact activities like innovation and strategic alignment.

Netflix and Google use advanced MLOps automation pipelines to ensure consistent performance across diverse environments. For instance, Google's MLOps practices leverage Kubeflow, an open-source toolkit that automates the deployment and scaling of ML models on Kubernetes, enabling continuous integration and delivery.

Technical insight: Automation in MLOps includes automated feature engineering, hyperparameter tuning, and model retraining. Use tools that enable the automation of these processes, ensuring that ML models remain robust and adaptive to new data.
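As an illustration of automated retraining, the sketch below flags a model for retraining when a feature's live values drift away from the values seen at training time. The function names, the 0.5 threshold, and the sample values are assumptions made for this example. Real pipelines typically use richer drift statistics and monitor many features at once.

```python
from statistics import mean, pstdev


def drift_score(reference, live):
    """Shift of the live mean from the reference mean, in reference standard deviations."""
    spread = pstdev(reference)
    shift = abs(mean(live) - mean(reference))
    if spread == 0:
        # A constant reference feature: any shift at all counts as unbounded drift.
        return 0.0 if shift == 0 else float("inf")
    return shift / spread


def should_retrain(reference, live, threshold=0.5):
    """Return True when drift exceeds the (assumed) threshold."""
    return drift_score(reference, live) > threshold


training_values = [1, 2, 3, 4, 5]  # feature values seen at training time
print(should_retrain(training_values, [1, 2, 3, 4, 5]))  # same distribution
print(should_retrain(training_values, [4, 5, 6, 7, 8]))  # mean has shifted upward
```

A scheduler could run a check like this on each new batch of data and start the retraining pipeline only when it returns `True`, which keeps retraining automatic without running it unnecessarily.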

Scalability and flexibility for enterprises

For large enterprises, scalability and flexibility are critical in competitive landscapes, so IT decision makers should ensure that MLOps strategies are designed with both qualities in mind. This involves investing in the right infrastructure and fostering a culture of agility and experimentation, allowing teams to quickly adapt to changing business needs and technological advancements.

Airbnb and other leading MLOps implementers have built MLOps frameworks that can handle vast amounts of data and complex workflows, enabling them to deploy models across multiple regions and platforms without compromising on performance.

Technical insight: Achieving scalability in MLOps requires a robust infrastructure that can handle distributed computing and large-scale data processing. Technologies such as Apache Spark, Kubernetes, and cloud-native solutions play a critical role in enabling organizations to scale their ML operations seamlessly.
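The pattern behind distributed data processing can be sketched on a single machine: split the data into partitions, let each worker compute a small summary, then combine the summaries. The example below uses a thread pool from the Python standard library as a local stand-in for a cluster. The function names and partition count are assumptions for illustration, and this is not how Apache Spark is invoked.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(values, n_parts):
    """Split values into n_parts roughly equal chunks."""
    return [values[i::n_parts] for i in range(n_parts)]


def partial_stats(chunk):
    """Map step: each worker returns a small (sum, count) summary of its chunk."""
    return sum(chunk), len(chunk)


def distributed_mean(values, n_parts=4):
    """Compute the mean by combining per-partition summaries."""
    chunks = partition(values, n_parts)
    with ThreadPoolExecutor(max_workers=n_parts) as pool:
        partials = list(pool.map(partial_stats, chunks))
    # Reduce step: only the small summaries are combined, never the raw data.
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count


print(distributed_mean(list(range(1, 101))))
```

Because each worker returns only a sum and a count, the combine step stays cheap no matter how large the partitions grow, which is the property that lets this style of computation scale out across machines.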

Scaling MLOps with cross-functional collaboration across teams

Effective MLOps is inherently cross-functional, requiring collaboration between data scientists, DevOps engineers, IT teams, and business stakeholders. Fostering cross-functional collaboration and collaborative MLOps workflows is crucial for bridging the gap between ML innovation and business outcomes. By creating integrated teams that bring together diverse expertise, organizations can ensure that their ML initiatives are aligned with strategic goals, leading to better decision-making and higher ROI.

At Microsoft and Spotify, cross-functional teams work together to align ML projects with business objectives, ensuring that the models are not only technically sound but also deliver measurable business value.

Technical insight: Facilitating cross-functional collaboration in MLOps often involves adopting collaborative practices that enable seamless communication and integration between different teams. These practices foster shared ownership of ML projects, enhancing transparency and accountability.

Driving continuous improvement in MLOps environments

MLOps is an iterative process that requires continuous learning and improvement. By continuously training models, gathering feedback, and iterating, companies can adapt to new data, refine predictions, and ultimately drive better business outcomes. This involves not only investing in the right tools and technologies but also encouraging a mindset of experimentation and learning within the organization.

Technical insight: Continuous learning in MLOps requires versioning, experiment tracking, and automated model updates. This enables teams to iterate on models quickly, improving their accuracy and performance over time.
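One way to picture versioning combined with automated model updates is a registry that numbers every model and promotes a new version to production only when it outperforms the current one. The `ModelRegistry` class, the model names, and the scores below are hypothetical and serve only to illustrate the idea.

```python
class ModelRegistry:
    """Hypothetical in-memory registry: numbered versions plus one production pointer."""

    def __init__(self):
        self.versions = []      # one dict per registered model version
        self.production = None  # version number currently serving, if any

    def register(self, model, score):
        """Store a model with its evaluation score and return its version number."""
        version = len(self.versions) + 1
        self.versions.append({"version": version, "model": model, "score": score})
        return version

    def score_of(self, version):
        return self.versions[version - 1]["score"]

    def promote_if_better(self, version, min_gain=0.0):
        """Promote `version` only if it beats production by more than min_gain."""
        if self.production is None:
            self.production = version
            return True
        if self.score_of(version) - self.score_of(self.production) > min_gain:
            self.production = version
            return True
        return False


registry = ModelRegistry()
v1 = registry.register("model-a", 0.80)
registry.promote_if_better(v1)                 # first model is promoted by default
v2 = registry.register("model-b", 0.79)
registry.promote_if_better(v2)                 # rejected: worse than production
v3 = registry.register("model-c", 0.85)
registry.promote_if_better(v3, min_gain=0.01)  # promoted: clear improvement
print(registry.production)
```

Every version stays in the registry even when it is not promoted, so teams can audit past candidates or point production back to an earlier version if a newer model misbehaves.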

Forward-thinking organizations are turning MLOps use cases into tangible business advantages by operationalizing ML at scale. From automation and collaboration to lifecycle management, the practices above define the new standard for machine learning success.

For CXOs, the key takeaway is clear: MLOps is not just a tech upgrade but a strategic enabler. By adopting these best practices, your organization can harness the full potential of ML, driving innovation, operational efficiency, and ultimately, competitive advantage.